AI agents are here, and they’re only getting smarter.

From customer support chatbots to autonomous research assistants, AI agents are transforming how work gets done. Powered by reasoning, tool use, and multi-step planning, these systems represent the next major evolution in generative AI. But with that evolution comes complexity: more moving parts, more failure points, and a greater need for oversight.

Today, Arthur is announcing new platform capabilities purpose-built for agentic AI, giving teams the tools they need to monitor, trace, and improve their most advanced LLM-driven workflows.

Why Agentic AI Needs Better Observability

Modern AI agents don’t just generate text; they reason, reflect, plan, act, and learn. They call tools, chain together multi-step decisions, collaborate with other agents, and adapt based on feedback. Whether you’re orchestrating with LangChain or AutoGen or building a custom framework, the result is the same: complex, opaque systems with high stakes.

When something goes wrong in a multi-agent workflow, where did it break? Was it a bad tool call? Outdated memory? A hallucinated step in the plan?

Until now, those answers have been hard to surface.

New: Tracing & Insights for Agentic Workflows

Arthur now lets you trace every step an agent takes, from first prompt to final action. This new capability provides:

- Execution Tracing: Visualize how input moves through prompts, tool calls, memory retrieval, and decisions.

- Failure Detection: Quickly pinpoint the exact step that broke, stalled, or veered off course.

- Granular Debugging: See intermediate outputs, latency, token usage, and more across the agent’s reasoning process.

- Performance Optimization: Analyze time spent, frequency of tool calls, and prompt effectiveness.
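To make step-level tracing concrete, here is a minimal, framework-agnostic sketch in Python. It is not Arthur's SDK or trace schema: the `Span` and `Trace` classes, their fields, and the token counts are hypothetical, and in a real system token usage would come from the LLM provider's response. Each agent step is wrapped so that its output, error, latency, and token count are recorded, which is enough to locate the first step that failed and to total the run's token usage.

```python
import time
from dataclasses import dataclass, field
from typing import Any, Callable, List, Optional


@dataclass
class Span:
    """One recorded agent step (hypothetical structure, not Arthur's schema)."""
    name: str
    kind: str  # e.g. "llm", "tool", "retrieval"
    output: Any = None
    error: Optional[str] = None
    latency_s: float = 0.0
    tokens: int = 0


@dataclass
class Trace:
    """Ordered list of spans for a single agent run."""
    spans: List[Span] = field(default_factory=list)

    def step(self, name: str, kind: str, fn: Callable[[], Any], tokens: int = 0) -> Any:
        """Run one step, recording output, latency, and any error instead of raising."""
        span = Span(name=name, kind=kind, tokens=tokens)
        start = time.perf_counter()
        try:
            span.output = fn()
        except Exception as exc:  # capture the failure so it shows up in the trace
            span.error = f"{type(exc).__name__}: {exc}"
        span.latency_s = time.perf_counter() - start
        self.spans.append(span)
        return span.output

    def first_failure(self) -> Optional[Span]:
        """Return the earliest step that raised an error, if any."""
        return next((s for s in self.spans if s.error is not None), None)

    def total_tokens(self) -> int:
        """Sum the token counts recorded across all steps."""
        return sum(s.tokens for s in self.spans)


# Simulated agent run: the tool call fails, and the trace pinpoints where.
trace = Trace()
trace.step("plan", "llm", lambda: ["search", "summarize"], tokens=120)
docs = trace.step("search", "tool", lambda: 1 / 0)  # stand-in for a broken tool call
trace.step("summarize", "llm", lambda: f"summary of {docs}", tokens=80)

failed = trace.first_failure()
print(failed.name, "->", failed.error)  # search -> ZeroDivisionError: division by zero
print(trace.total_tokens())  # 200
```

Recording errors rather than re-raising keeps the whole run in the trace, so a downstream step that quietly received bad input (here, `docs` is `None`) is still visible next to the step that caused it.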

Whether you’re debugging a misfiring agent or optimizing a high-volume pipeline, this tracing gives you step-by-step visibility into agent behavior.

Real-Time Metrics for Agent Monitoring

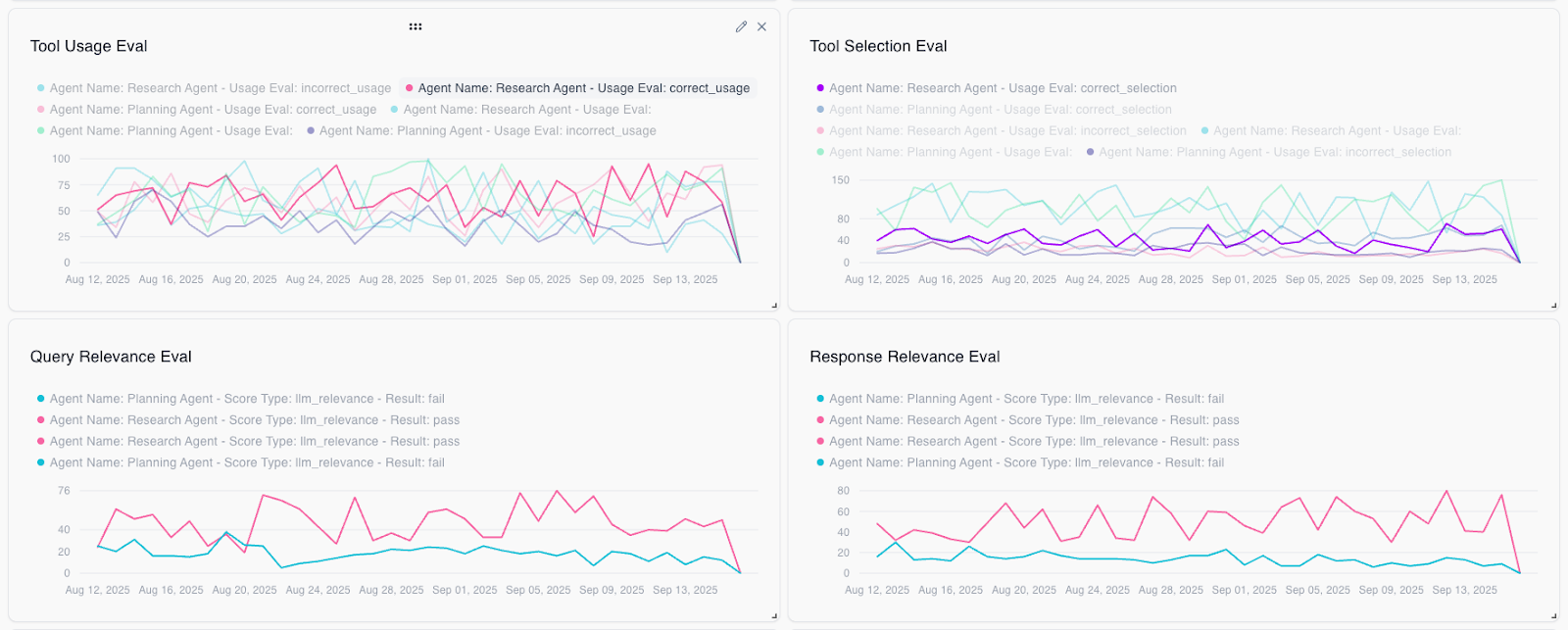

Our new Agent Monitoring Dashboard offers a tailored experience for teams deploying and evaluating LLM agents in production. This includes:

- Tool Selection Accuracy: Was the right tool used at the right time?

- Response Relevance: How well does the agent’s response align with the user’s intent?

- Latency & Token Usage: Track performance and cost drivers.
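As one illustration of what a metric like Tool Selection Accuracy measures, here is a minimal sketch that scores labeled agent runs offline. It assumes each step is labeled with the tool the agent should have used; the function name and data format are hypothetical, and Arthur's own metric may be computed differently.

```python
from typing import List, Optional, Tuple


def tool_selection_accuracy(steps: List[Tuple[str, Optional[str]]]) -> float:
    """Share of steps where the agent's chosen tool matches the labeled expected tool.

    Each step is (expected_tool, chosen_tool); chosen_tool is None when the
    agent made no tool call. Returns 0.0 for an empty list (hypothetical convention).
    """
    if not steps:
        return 0.0
    correct = sum(1 for expected, chosen in steps if chosen == expected)
    return correct / len(steps)


labeled_steps = [
    ("web_search", "web_search"),
    ("calculator", "web_search"),  # wrong tool chosen
    ("calendar", "calendar"),
    ("web_search", None),  # agent skipped a needed tool call
]
print(tool_selection_accuracy(labeled_steps))  # 0.5
```

Exact-match scoring is the simplest choice; it treats a skipped tool call the same as a wrong one, which is usually what you want when asking whether the right tool was used at the right time.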

Onboarding is guided, contextual, and designed to support popular orchestration tools like LangChain, DSPy, and AutoGen, so you can get started quickly and with confidence.

From Black Box to Glass Box

Agentic AI demands more than traditional monitoring. It requires context-aware debugging, continuous evaluation, and robust safety controls. Arthur’s new capabilities turn your AI systems from a black box into a glass box: one that’s observable, optimizable, and governable.

Whether you’re scaling RAG-powered agents, automating internal workflows, or building autonomous research copilots, Arthur gives you the visibility and control you need.

The next generation of AI is agentic. And now, it’s observable.

Get started today or book a demo to see agent monitoring in action.